From Course Evaluation to Quality System: The History

Starting from the creation of a course evaluation questionnaire, we have built a coherent system for quality evaluation and improvement of courses and programs at the basic and advanced levels at KI, commissioned by the Board of Education (since January 2019, the Committee for Higher Education).

In autumn 2014, the Dean of Education at the time, Jan Olov Höög, approached Terese Stenfors and assigned her the development of a new standardized questionnaire that would be mandatory for all basic and advanced level courses at KI. Several previous attempts had failed due to low response rates, lack of follow-up and criticism from the teachers.

Terese had just created an evaluation unit and this project marked one of the starting points of a successful venture.

Development of a three-tier course evaluation system

A key source of data for educational quality is our students. One way of gathering such data is through questionnaires distributed at the end of a course.

However, course evaluation questionnaires often have several different aims, including, for example, improvement of teaching and learning, personnel decisions and promotions, program evaluation and national quality assurance, or course selection by students. Thus, stakeholders range from individual teachers and students to policy makers at all levels.

This breadth of aims may mean that neither the students nor the teachers feel that the questionnaire ‘speaks to their course’.

In turn, we see low response rates and little use of the data by teachers and policy makers. Standardized instruments seldom meet the needs of all stakeholders involved. Questionnaires specially designed for specific courses have the advantage of being more directly targeted and are as such perhaps more useful for course development. However, they make it impossible to compare results between courses within a program, a department or an institution, or to follow results over time.

Thus, we had to ask ourselves: How can we develop a standardized questionnaire that meets the needs of all stakeholders, i.e. that of the institution, the programs, the individual courses, teachers and students, while maintaining alignment with educational theories and empirical evidence of high quality teaching and learning?

Development of the instrument and five core constructs

The new instrument needed to be in line with KI’s institution-wide strategic plan, be useful for quality evaluation and improvement of education at the institutional and departmental, as well as at the program level, provide data applicable in the national reviews, and not least be easily applied by teachers as a tool for course development.

Initially we conducted an analysis to fully explore the experiences of the users of KI’s previous mandatory questionnaire, and the questionnaire in place before that.

We conducted interviews with faculty and administrative staff identified as key users of the prior questionnaires, or as having been involved in the development and implementation of these.

We recruited interview participants through snowball sampling, i.e. the respondents were asked to recommend additional potential respondents.

We conducted a literature review to explore the current state of the art and best practices.

Finally, we formed a project group comprising teacher representatives, the central quality committee, students, and a program coordinator, including research expertise in medical education and psychometrics (Sandra Astnell, Petter Gustavsson, Ewa Ehrenborg, Carolina Carneck, Tove Hensler).

As a first step, based on the pre-study and the literature review, we launched the idea of a three-tier model.

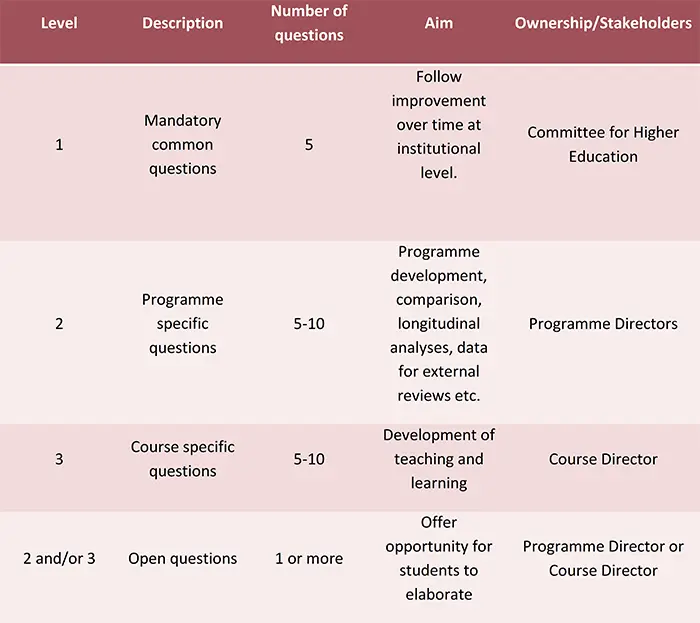

As described in Table 1, we defined the aims and stakeholders of the three different levels of the questionnaire. Thus, mandatory items could be limited to the aims and areas of interest to the university management (Committee for Higher Education), whilst items of interest to program, course or teacher aims could be included at levels two or three.

Through the interviews and literature review, together with an analysis of key policies and action plans at the university, we identified a number of core areas (constructs). We presented these proposed core constructs, together with the three-tier model, to different critical reference groups at the institution, such as the Board of Education, the Student Union, the Pedagogical Academy, staff members at the Centre for Clinical Education and the Unit for Medical Education, as well as a number of educational program boards, in order to explore whether a shared understanding of the features of the constructs existed.

The instrument was designed partly on the basis of local policy and guidelines for education; hence, all experts involved in ensuring content validity were faculty, students or staff at the university.

We proposed that the core constructs, namely relevance of the course; fulfilment of the learning outcomes; coherence of the course; scientific focus of the course; and student engagement, should be explored in every course, as mandatory items in the questionnaire, but that program boards could add further areas of interest (constructs) to their respective programs. Similarly, course directors could add questions linked to areas of particular interest to the specific course in question.
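The three-tier structure described above can be pictured as a small data structure. The following is only a minimal sketch: the class name `Questionnaire`, its fields and the example tier-two and tier-three items are hypothetical, and only the five core construct names are taken from the text.

```python
from dataclasses import dataclass, field

# The five mandatory core constructs named in the text (tier one).
CORE_CONSTRUCTS = (
    "relevance of the course",
    "fulfilment of the learning outcomes",
    "coherence of the course",
    "scientific focus of the course",
    "student engagement",
)


@dataclass
class Questionnaire:
    """Hypothetical container for one course's evaluation questionnaire."""
    program_items: list = field(default_factory=list)  # tier two, chosen by the program board
    course_items: list = field(default_factory=list)   # tier three, chosen by the course director

    def items(self):
        """Return (tier, label) pairs; the tier-one core is always included."""
        tiers = [(1, construct) for construct in CORE_CONSTRUCTS]
        tiers += [(2, item) for item in self.program_items]
        tiers += [(3, item) for item in self.course_items]
        return tiers


# Illustrative usage with made-up tier-two and tier-three items.
questionnaire = Questionnaire(
    program_items=["interprofessional learning"],
    course_items=["usefulness of the laboratory sessions"],
)
for tier, label in questionnaire.items():
    print(tier, label)
```

The point of the design is that the core cannot be switched off, while the two outer tiers are optional and can differ between programs and courses.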

Drawing on existing questionnaires and our expertise in questionnaire design, we developed five items, one for each of the identified core constructs. We asked the reference groups to assess whether the items were written in a language that the participants understood and whether they were relevant with respect to the core constructs.

Previous research and experience have shown that if teachers, or other stakeholders, do not agree with or understand the aims of the course evaluation questionnaires that they are asked to administer and incorporate into their quality improvement, this will have a negative effect both on student response rates and on quality improvement. Thus, we emphasized stakeholder buy-in before we moved to the next step, namely testing the five items in larger student cohorts.

Testing the five items on a larger scale

To ensure that respondents would interpret the items representing the core constructs in the same way, respond accurately, and be willing and motivated to answer, we engaged approximately 530 students in a three-step pilot review:

- Cognitive interviews with 100 students. This step was taken to ensure response process validity. The students were presented with the items and the suggested response anchors. For half the respondents a think-aloud technique was used, and for the other half we used verbal concurrent probing.

- Statistical analysis (psychometric testing) of pilot data from 404 students in 13 different courses was conducted.

- Qualitative analysis on comments added to the pilot from 150 students (a subgroup of the above students) was performed.

Data from the pilot showed acceptable inter-item correlations and high internal consistency among items, thus indicating good reliability. In the analysis, we accounted for response distributions and item interactions. We confirmed that the questions were being interpreted as intended by the students. The analysis of the questionnaire comments showed the need for an open question where students could add their viewpoints on the course.
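Internal consistency is commonly summarized with Cronbach's alpha. The text does not state which coefficient was used in the pilot, so the following is only a minimal sketch of that common choice. The ratings and the 1-6 response scale are hypothetical and are not KI data.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(responses[0])  # number of items
    item_variances = [variance([r[i] for r in responses]) for i in range(k)]
    total_variance = variance([sum(r) for r in responses])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical ratings from six students on five items (assumed 1-6 scale).
ratings = [
    [5, 5, 4, 5, 4],
    [3, 3, 3, 2, 3],
    [6, 5, 6, 6, 5],
    [2, 3, 2, 2, 3],
    [4, 4, 5, 4, 4],
    [5, 6, 5, 5, 6],
]
alpha = cronbach_alpha(ratings)
print(f"Cronbach's alpha: {alpha:.2f}")
```

Values close to 1 indicate that the items vary together across respondents, which is what supports treating them as measures of a shared underlying quality.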

For further detail on the pilot study, please contact us for a copy of the report.

The Board of Education (now the Committee for Higher Education) approved the new questionnaire in December 2014 and it has been gradually implemented for all courses in undergraduate and master’s programmes at the university, becoming mandatory during spring 2016.

Our team of evaluation experts analyse the data and present an annual report to the Committee for Higher Education. The report includes response rates, changes over time in the data, comparisons among programs, and the following additional comparisons: English vs. Swedish courses; mandatory vs. elective courses; and courses incorporating workplace-based learning vs. those without.

Psychometric properties such as validity do not pertain to an instrument as such; rather, they are a feature of the analysis of results generated in a particular context. Validation is therefore an ongoing process. To date, it has included investigating response bias due to gender, age, language of the questionnaire (Swedish or English), whether the course is campus-based or distance, and whether it includes workplace-based learning (clinical placement) (1).

The psychometric properties of the five standardized questions are robust: the items show high internal consistency and load on a single factor. An aggregated sum score is therefore justified and can be used when comparing findings between programs over time at the university.

We used an Item Response Theory model, the one-parameter logistic (1PL) model, which uses item difficulty as its item parameter, to explore which questions best distinguish courses of perceived high quality from the others. A user guide was developed to help teachers and programme directors interpret their results.
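For readers unfamiliar with the 1PL model, the sketch below shows its response function in the standard dichotomous form. It assumes that responses are reduced to positive versus not positive, which the text does not confirm. The function name `p_endorse` and the difficulty values are hypothetical and are not estimates from the KI data.

```python
import math


def p_endorse(theta, difficulty):
    """1PL probability of a positive response, given latent level and item difficulty."""
    return 1.0 / (1.0 + math.exp(-(theta - difficulty)))


# Hypothetical item difficulties on the latent scale (not KI estimates).
difficulties = {"relevance": -1.0, "coherence": 0.0, "scientific_focus": 1.0}

for name, b in difficulties.items():
    probabilities = [round(p_endorse(theta, b), 2) for theta in (-1.0, 0.0, 1.0)]
    print(name, probabilities)
```

Because all items share the same slope in a 1PL model, an item separates respondents most sharply around the latent level equal to its own difficulty. A harder item is therefore the more informative one among highly rated courses.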

We have also explored which factors predict high response rates, as low response rate is a common problem in course evaluations.

Using a multiple regression analysis we found that student interest and teacher involvement were both predictors of response rates, whilst course quality did not predict response rate above and beyond the first two predictors (2).
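One common way to check whether a predictor adds anything "above and beyond" others is to compare the explained variance of a model with and without it. The sketch below illustrates that idea with plain least squares. It is not the analysis reported in the manuscript, and the course data and variable names are hypothetical.

```python
def r_squared(X, y):
    """R^2 of an ordinary least squares fit of y on the columns of X plus an intercept."""
    rows = [[1.0] + list(x) for x in X]
    p = len(rows[0])
    # Normal equations (A^T A) beta = A^T y, stored as an augmented matrix.
    M = [[sum(r[i] * r[j] for r in rows) for j in range(p)]
         + [sum(r[i] * yi for r, yi in zip(rows, y))] for i in range(p)]
    # Gauss-Jordan elimination with partial pivoting.
    for c in range(p):
        pivot = max(range(c, p), key=lambda k: abs(M[k][c]))
        M[c], M[pivot] = M[pivot], M[c]
        for k in range(p):
            if k != c:
                factor = M[k][c] / M[c][c]
                M[k] = [a - factor * b for a, b in zip(M[k], M[c])]
    beta = [M[i][p] / M[i][i] for i in range(p)]
    fitted = [sum(b * v for b, v in zip(beta, r)) for r in rows]
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1 - ss_res / ss_tot


# Hypothetical courses: (student interest, teacher involvement, course quality, response rate).
courses = [
    (2.0, 1.0, 3.5, 0.33), (3.0, 2.0, 4.0, 0.45), (4.0, 2.0, 3.0, 0.55),
    (5.0, 3.0, 4.5, 0.67), (3.0, 4.0, 5.0, 0.59), (2.0, 3.0, 4.0, 0.43),
    (4.0, 4.0, 3.5, 0.66), (5.0, 1.0, 4.0, 0.57),
]
y = [rate for *_, rate in courses]
r2_base = r_squared([(i, t) for i, t, _, _ in courses], y)     # interest + involvement
r2_full = r_squared([(i, t, q) for i, t, q, _ in courses], y)  # ... plus course quality
print(f"R2 without quality: {r2_base:.3f}")
print(f"R2 with quality:    {r2_full:.3f}")
print(f"Increment:          {r2_full - r2_base:.3f}")
```

A negligible increment when course quality is added corresponds to the pattern described above. A real analysis would also test whether the increment is statistically significant.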

1) Bujacz, A, Bergman, L, Stenfors, T. How to make course evaluation results useful: A multilevel validation strategy. Manuscript.

2) Bergman, L, Bujacz, A. Student interest and teacher involvement predict response rates to course evaluation surveys in higher education. Manuscript.

The question bank

A potential limitation of the three-tier model is that the number of items for each construct has to be limited, so single items are used for the five mandatory constructs (i.e. one question per core construct).

To address this, we developed a question bank, a thematically organized list of items. The purpose of the question bank is to support programs and course directors in selecting questions for levels two and three (program-specific and course-specific items).

The question bank currently includes ten constructs:

- the five core constructs

- five additional constructs

We used the following method to develop the question bank:

As part of the literature review to develop the instrument, a number of existing instruments were reviewed, and all items (over 600) in all identified instruments were put into an Excel spreadsheet.

The five core constructs had already been defined, so for these, a deductive analytic process could be used, i.e., all items were sorted according to whether they fit within these constructs or not. For all items not fitting within the core constructs, a team of educational researchers at the Evaluation Unit conducted a thematic analysis.

Once a number of constructs had been defined and agreed upon, a larger team of researchers conducted inter-rater reliability testing; this consisted of sorting the items into the existing constructs, with the possibility of suggesting additional constructs and revising the definition of existing constructs.
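Agreement between raters who sort items into constructs is often quantified with a chance-corrected statistic. The text does not say which statistic was used, so the sketch below shows Cohen's kappa for two raters as one common option. With a larger team, a multi-rater statistic such as Fleiss' kappa would be the usual choice. The labels assigned below are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Chance-corrected agreement between two raters' category labels."""
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected if both raters labelled at random with their own base rates.
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / n ** 2
    return (observed - expected) / (1 - expected)


# Hypothetical construct labels assigned to eight items by two raters.
rater_a = ["relevance", "coherence", "engagement", "relevance",
           "coherence", "engagement", "relevance", "coherence"]
rater_b = ["relevance", "coherence", "engagement", "coherence",
           "coherence", "engagement", "relevance", "relevance"]
kappa = cohens_kappa(rater_a, rater_b)
print(f"Cohen's kappa: {kappa:.2f}")
```

Items on which raters disagree are natural candidates for the revision of construct definitions mentioned above.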

Further, we developed sub-themes for all constructs. After this process was finished, we revised items not formulated correctly, removed items that were too similar and removed items not in line with KI's policy and current research in teaching and learning.

Further, the question bank was aligned with the new national guidelines from the Swedish Higher Education Authority and with the European guidelines from the European Association for Quality Assurance in Higher Education (Standards and Guidelines for Quality Assurance in the European Higher Education Area).

Each construct has a definition and a number of sub-themes. References are provided for further reading. The development of the question bank is an ongoing process.

When a programme or course uses items that are not already in the question bank, these items are added to the bank. When a new construct is needed, we add items for that construct. For details about the question bank development process please contact us.

Latest version of the question bank (in Swedish)

As other questionnaires have been developed (see below), question banks for these questionnaires have also been developed using the same developmental principle.

Currently there are three additional question banks: for exit polls, employability surveys and alumni surveys. To some extent, these question banks use the same constructs as the initial question bank, but some constructs are not relevant and others have been added.

Development of an exit poll, alumni survey, student barometer and employability survey

Between 2015 and 2017 the Evaluation Unit was assigned to develop a graduate employability survey, an exit poll, and an alumni survey to be used by all of KI’s undergraduate programs.

To develop these, we used a process similar to the one described above. We conducted a literature review, gathered questionnaires used at other universities and in different programs at KI, and formed a reference group. All questionnaires have been tested by a group of experts, through cognitive interviews, and psychometrically through pilot tests. For more details on the development process for these three questionnaires, please contact us for the reports.

A student barometer is conducted regularly at KI. Previously, this was a questionnaire administered to all registered students by an external evaluation company.

In 2017 the Evaluation Unit was assigned to conduct the survey, and it was suggested that another method be used to deepen our understanding of some of the themes identified as problematic in previous years. Hence, last year's student barometer was conducted via focus groups with students. We developed the themes for the interviews based on the findings from previous years, feedback from student representatives and a gap analysis of constructs missing from the other questionnaires.

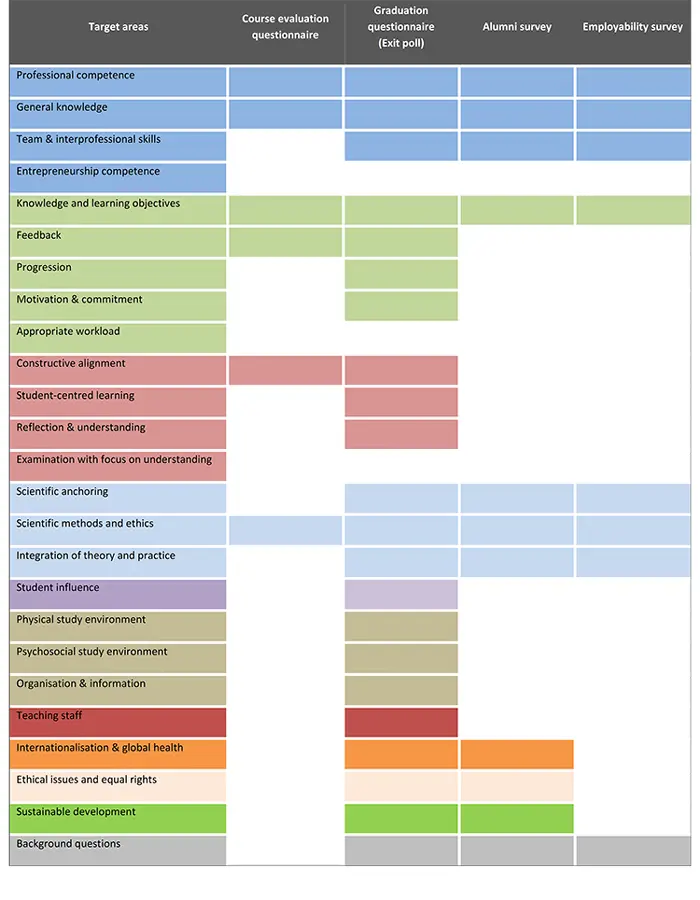

What does our system measure?

Now that all of the parts of the system are in place, we can use our target areas or constructs to capture what we actually measure.

We help programs map data from all sources to the constructs in the question bank, so that gaps can easily be identified.

A program might wish to focus, for example, on sustainable development or constructive alignment in its quality improvement for the coming year. It can then select questions measuring the chosen constructs for use in, for example, course evaluations, the exit poll, or special questionnaires for each semester or for clinical placements.
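The mapping of data sources to constructs amounts to a simple coverage check. The sketch below is purely illustrative: the source names, the constructs assigned to each source and the list of target constructs are hypothetical.

```python
# Hypothetical mapping from data sources to the constructs their items cover.
coverage = {
    "course evaluation": {"relevance", "learning outcomes", "coherence"},
    "exit poll": {"relevance", "employability"},
    "alumni survey": {"employability"},
}

# Constructs a program wants to follow in the coming year (illustrative only).
target_constructs = {
    "relevance",
    "coherence",
    "sustainable development",
    "constructive alignment",
}

# Everything measured by at least one source, and the targets nobody measures yet.
covered = set().union(*coverage.values())
gaps = sorted(target_constructs - covered)
print("Constructs not yet measured:", gaps)
```

Each construct listed as a gap points to where questions from the question bank could be added to one of the existing questionnaires.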

The guidelines and KI’s coherent quality system

As part of the implementation process of the new three-tier evaluation system, and to emphasize how the questionnaire data can be used in quality improvement, in collaboration with the Drafting Committee for Quality System (Beredningsgruppen för Kvalitetssystem) we wrote new guidelines for quality in education.

The new guidelines were implemented in 2015 and include instructions for a yearly quality plan. Based on their annual evaluation data, each year all programs agree upon improvement areas and relevant actions, such as a change of teaching method or more constructively aligned examinations.

The question bank can be used to select further questions for level two in the three-tier system, in order to follow up on these activities. Activities can also be set up to explore areas where the data are unclear or inconclusive, with the question bank used to clarify these areas.

Many programs work more closely with the Evaluation Unit to develop their quality plans and annual cycles for data collection and follow-up.

In 2017 the Drafting Committee for Quality System updated the guidelines for quality assurance of undergraduate courses and programs.

The guidelines align with KI’s coherent quality system which was agreed upon in 2017. For this system, data from the standardized course evaluation questionnaires are used as quality indicators. Hence, the three-tier system is used in quality plans at program level but also in the overarching quality system at KI.

The Committee for Higher Education and the Committee for Doctoral Education commissioned the Evaluation Unit to develop methods for evaluating, managing, analysing and reporting on several aspects of educational quality during 2014-2020. The Evaluation Unit was responsible for investigating self-perceived aspects of educational quality from the perspective of both internal (students and staff) and external (employer) stakeholders. We did this through student course evaluations, the student barometer, exit polls, alumni surveys and future employee surveys. Since January 2021, UoL (Undervisning och Lärande/Teaching and Learning) has managed the quality system.

Acknowledgements

The development of the standardized questionnaire, the exit poll, the alumni survey, the student barometer and the annual summaries was commissioned by the Board of Education (now the Committee for Higher Education), through the Drafting Committee for Quality System (Beredningsgruppen för Kvalitetssystem).

The Committee for Higher Education (previously the Board of Education) does not commission any research related to the quality system (such as the more advanced validation studies).

Current and previous team members in the evaluation unit: Louise Bergman, Elizabeth Blum, Anna Bonnevier, Aleksandra Sjöström Bujacz, Tove Bylund Grenklo, Eva Macdonald, Sivan Menczel, Per Palmgren, Linda Sturesson, Zoe Säflund, Teresa Sörö, and Terese Stenfors.